Best PC platform for running Esxi/Docker at home?

-

@monte said in Best PC platform for running Esxi/Docker at home?:

@NeverDie to be honest, I don't remember :) It was so long ago, and I've just been updating the system since then. But I guess there might be an option in the installer... I will try it in a VM, you made me curious :)

UPDATE: I've just run the install and it's plain and simple, with no extra options. It automatically partitioned the disk. The only drawback is that you can't fine-tune the sizes of the partitions, but that can be done later with some LVM magic :)

That's what I started with too, but then when I went to upload ISO's or create VM's, ProxMox said there was no disk available for that.

-

@NeverDie that's strange. What's the size of your disk? Also, you can see the LVM structure with the `lvdisplay` command. As I've mentioned, you can also manage the sizes of said LVs in the console.

@monte said in Best PC platform for running Esxi/Docker at home?:

What's the size of your disk?

1 terabyte. It's a Samsung nvme SSD.

-

I've tried googling up instructions on how to do it exactly. It seems that others besides just me have struggled with this as well. The only solution proposed which someone claimed worked, which I haven't yet tried, seems to be to start with a Debian install and then upgrade it to ProxMox. But there were no simple 123 instructions on how to do that either, so I just threw in the towel and decided to go with 3 disks, like in the beginner tutorial.

-


@NeverDie this is completely doable within the Proxmox installation. Please show me the output of `lvdisplay`.

Or if you have already set up your system, then let it be :)

-

Better yet, I'll reinstall it to the single 1TB disk and show you the lvdisplay output from that, which will make more sense in this context.

-

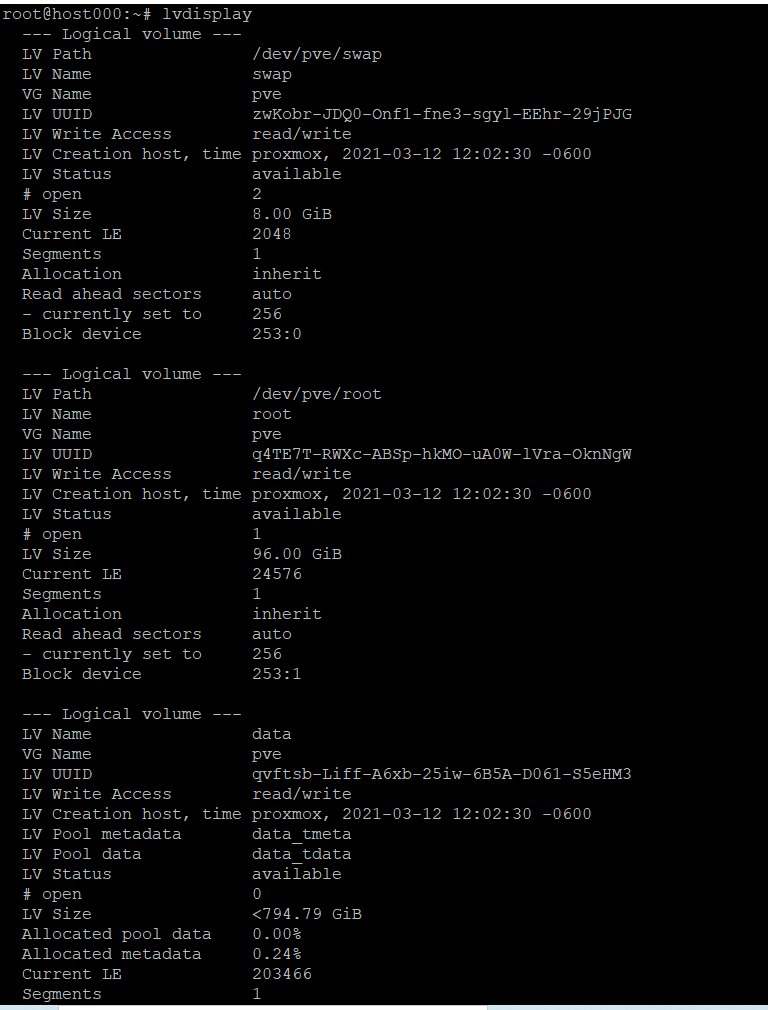

OK, did that.

This is with Proxmox installed to the single 1TB disk. No USB drives involved.

-

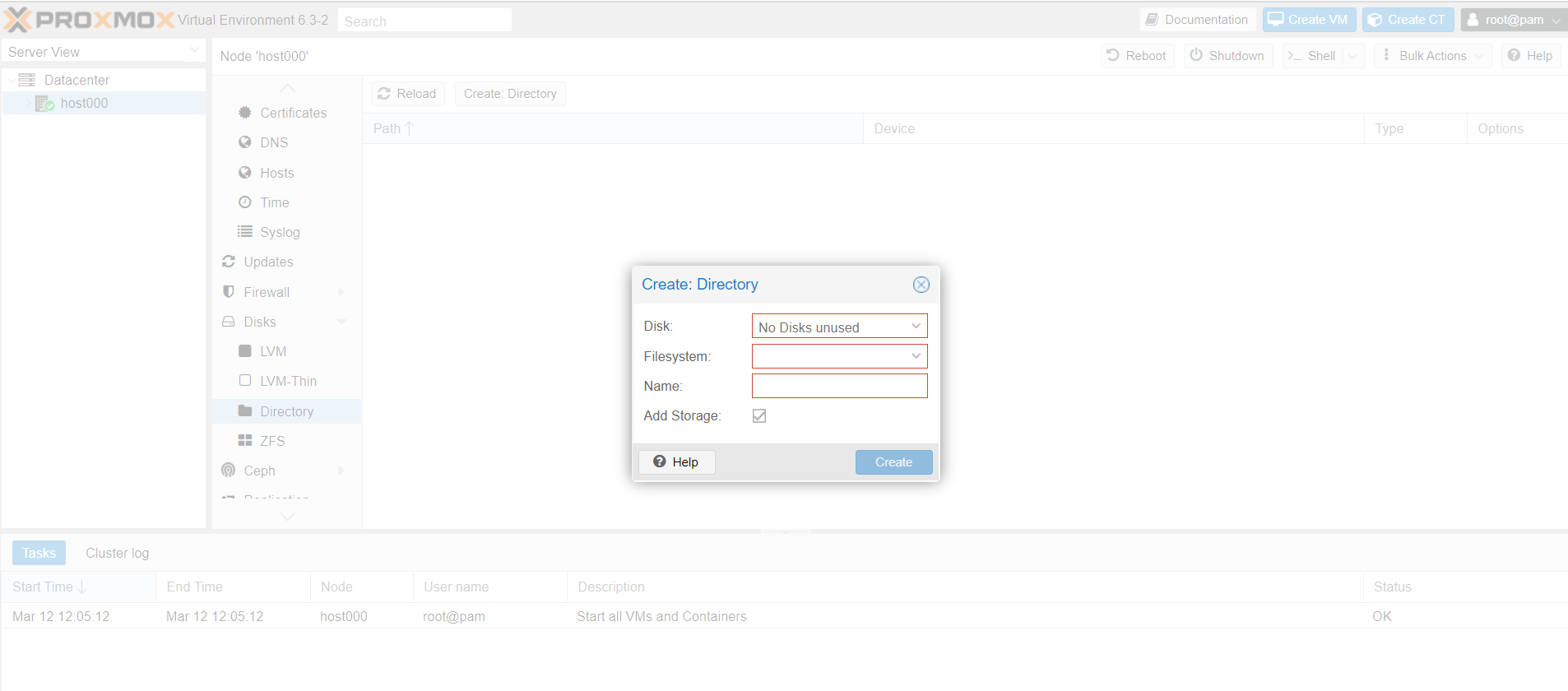

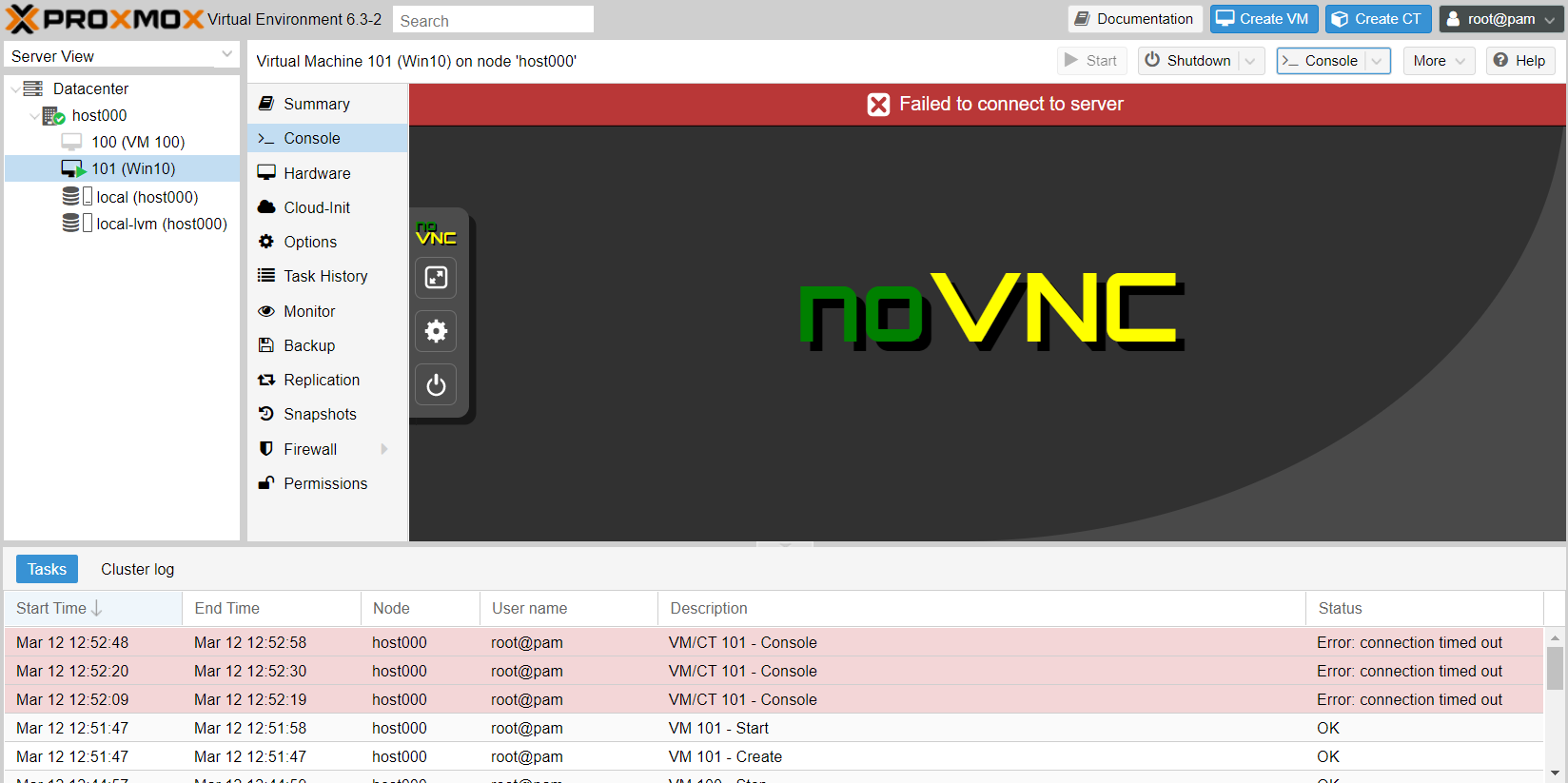

@monte Right. But then I get this:

which is where I get stuck. If I add more physical disks, then this doesn't happen.

-

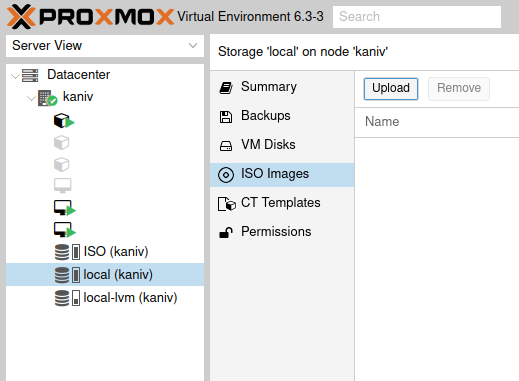

@NeverDie expand the `host000` item in the list on the left. Choose the storage `local` at the end of the list, choose `ISO Images`, and hit `Upload`.

Then press `Create VM` at the top right of the GUI.

-

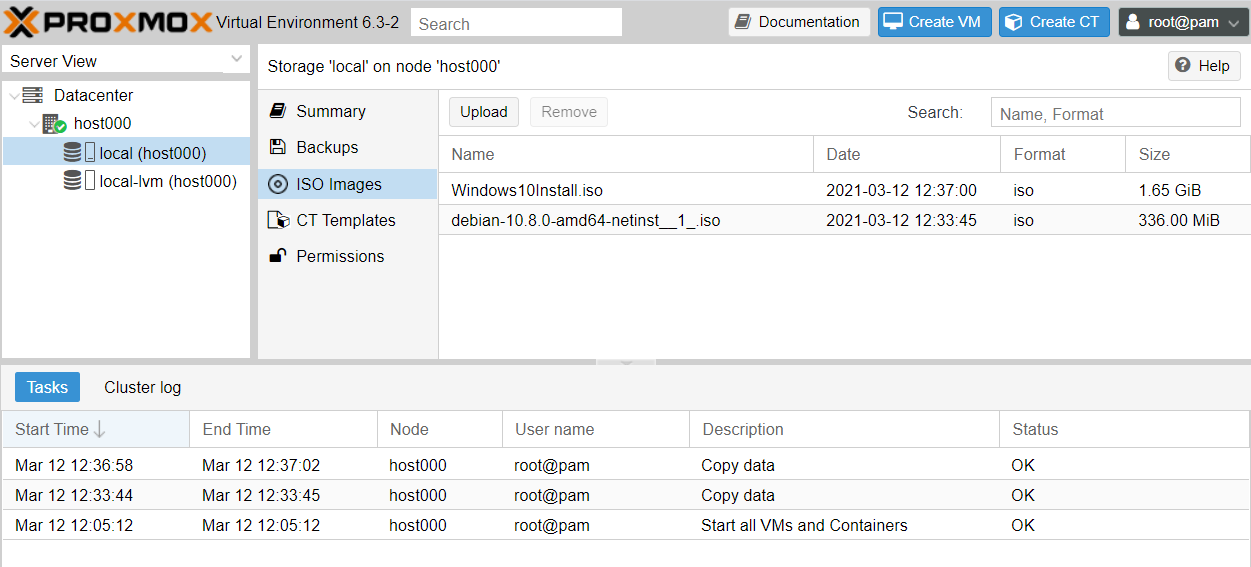

@monte Thank you! That worked:

I uploaded both Debian and Windows ISOs.

So, after starting the VM, I gather I just click "Monitor" to see the VM's virtual display?

-

@NeverDie no, you use `Console`, just below the `Summary` button.

I must add, I've never installed Windows in Proxmox, so I can't say how the install process works with it, but I think someone on Google knows :)

-

@monte Right. Just tried it, but got this:

Well, I won't burden you with more questions. Thanks for your help!

-

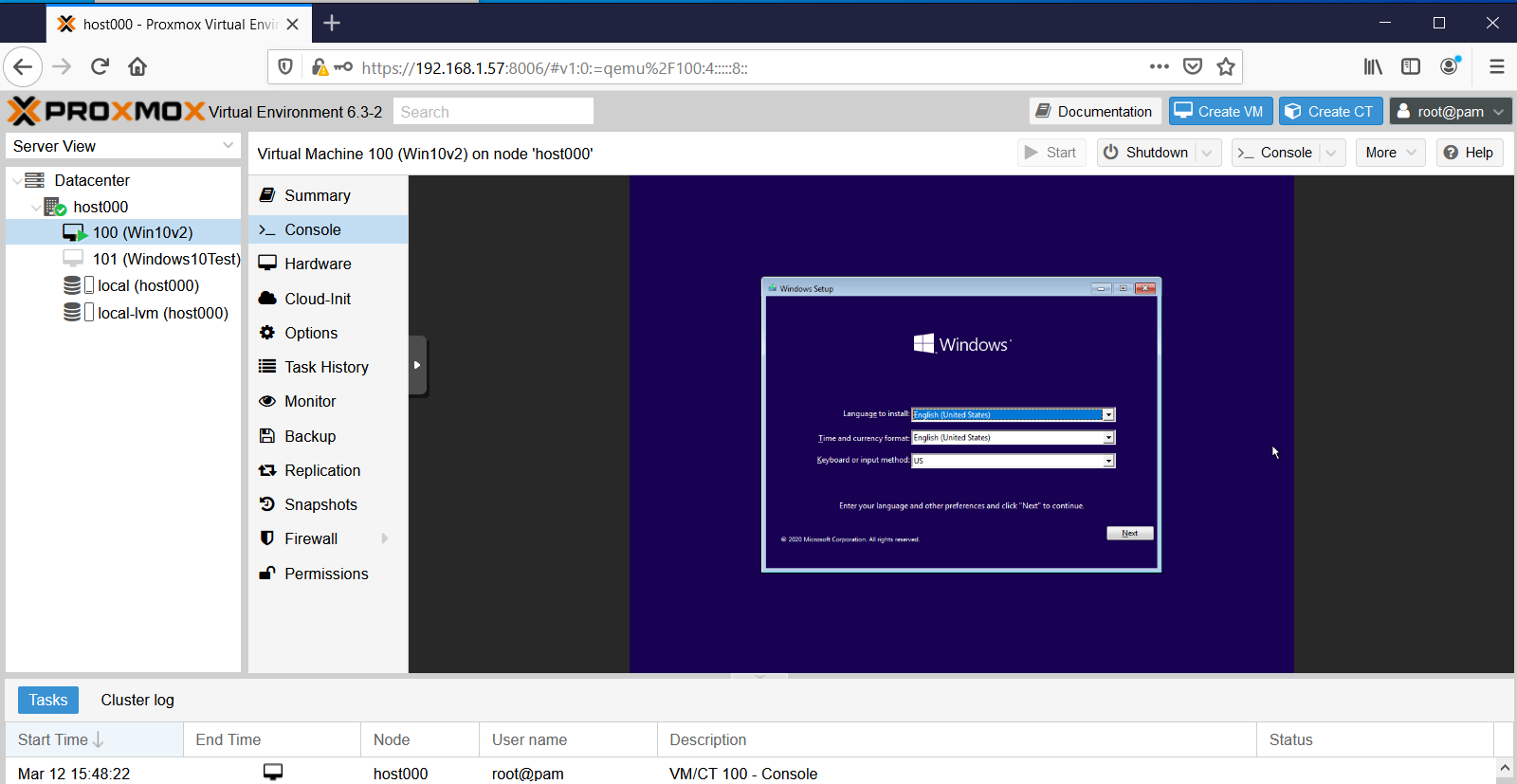

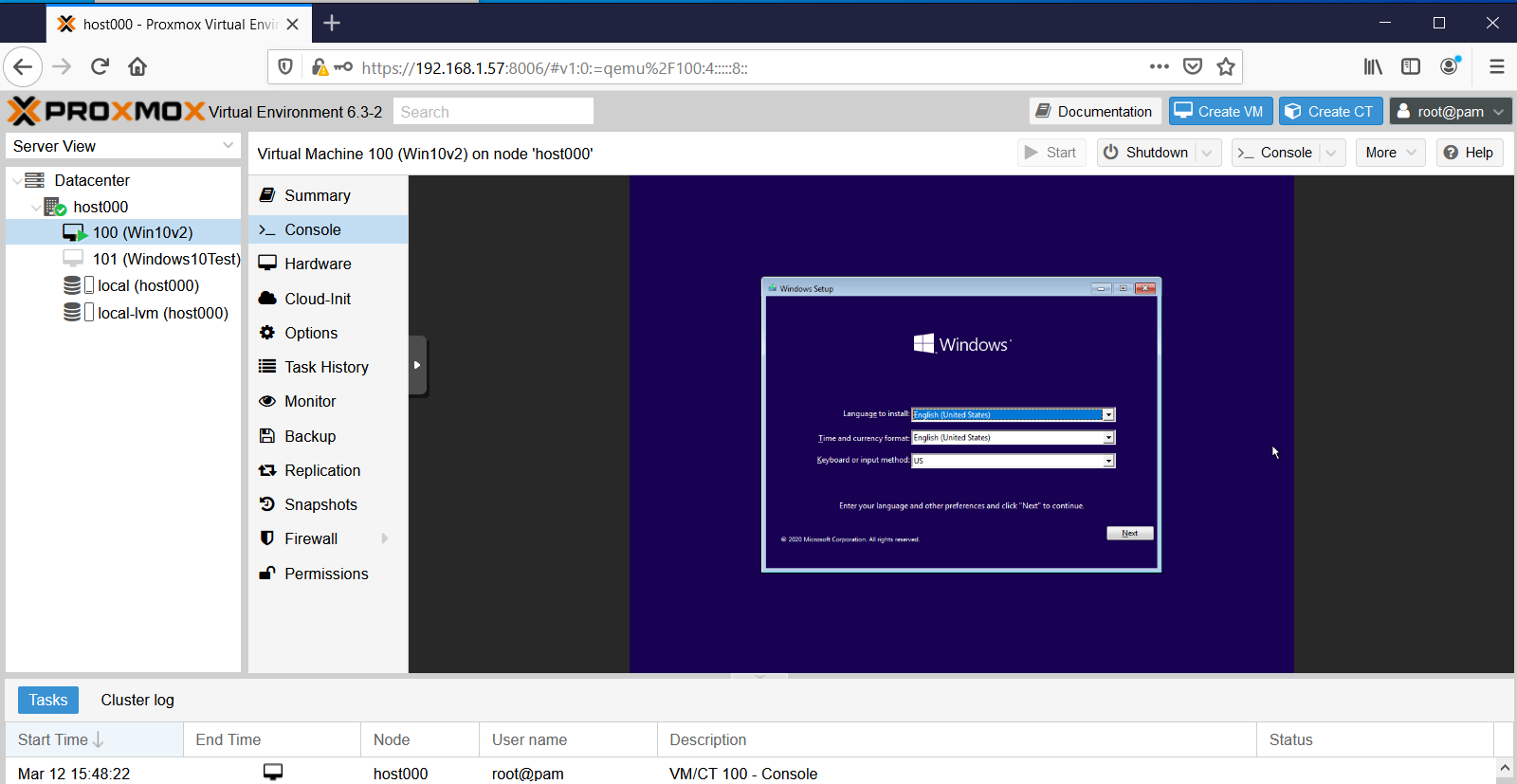

Reporting back: After trying many things and much frustration :dizzy_face: , it turned out the reason for the noVNC console failure was, of all things, Google Chrome. :confounded:

Who'd have guessed? Thank you Google. :angry: :angry: :angry: Too bad there's no emoji for sarcasm. :rolling_on_the_floor_laughing: Actually, I like Google Chrome a lot, so I hadn't even suspected it. Switching to Firefox fixed the issue:

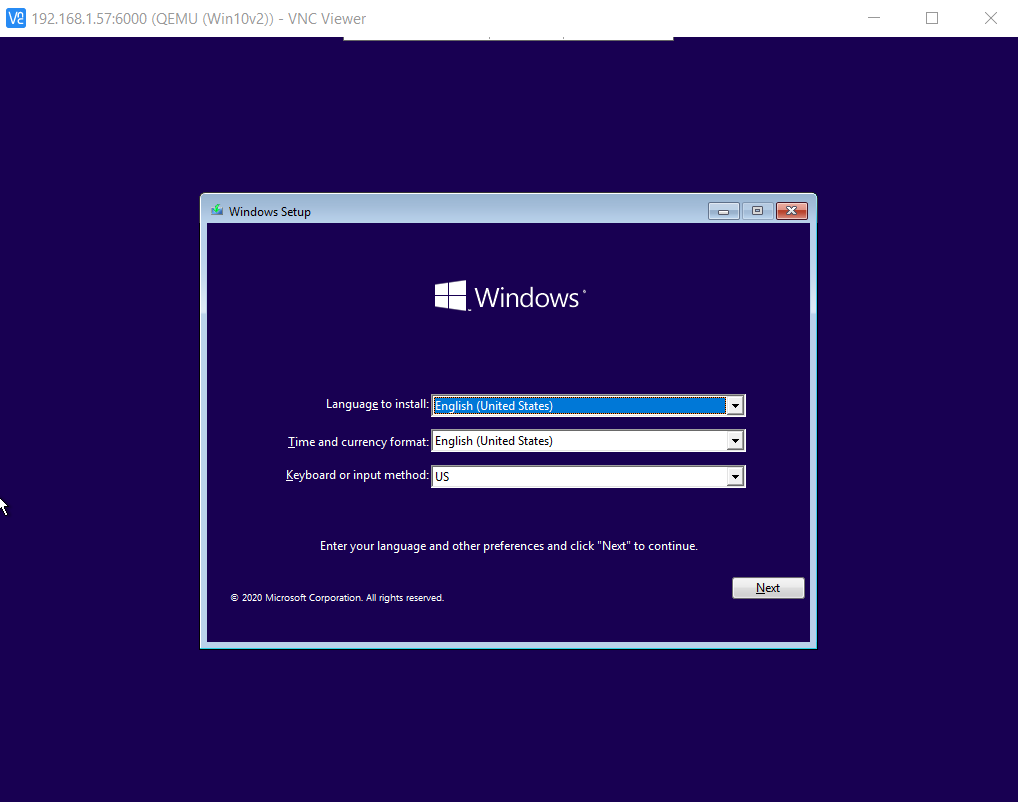

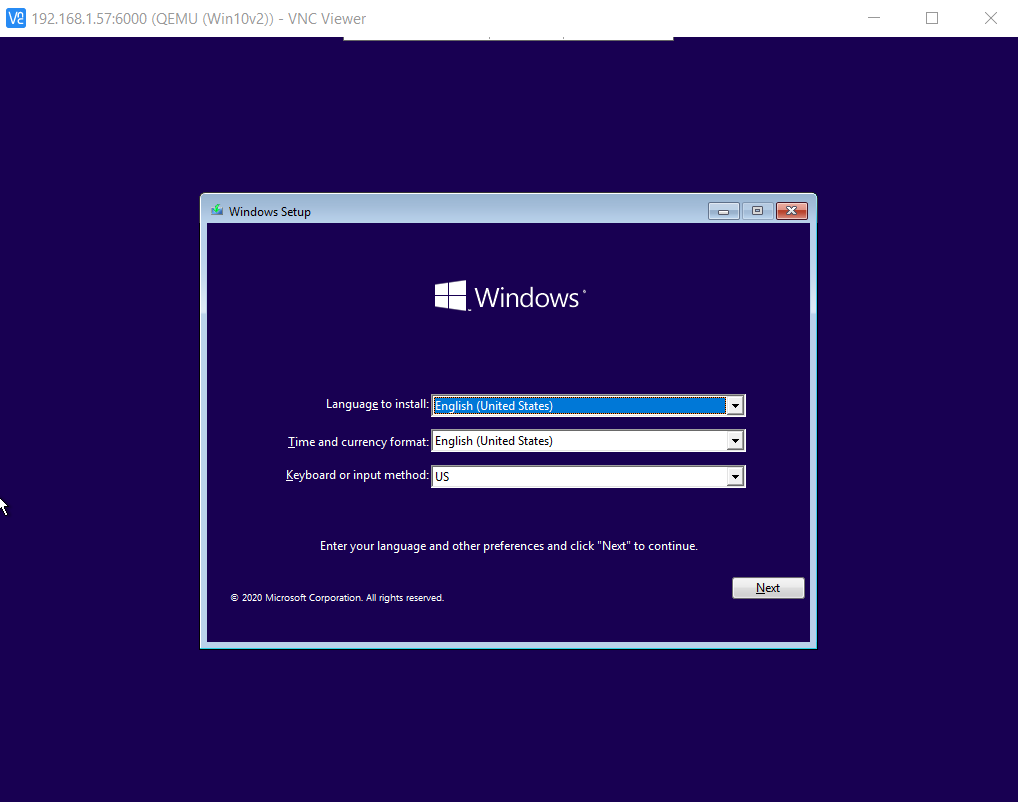

I'm also able to connect to it using VNC Viewer:

by typing:

change vnc 0.0.0.0:100

into the VM's monitor window, as described here: https://pve.proxmox.com/wiki/VNC_Client_Access#Standard_Console_Access

I even tried installing the noVNC Google Chrome extension, but still no joy. No idea as to why Chrome failed for this, but at least now the culprit is identified. :smile:

-


@NeverDie I'm happy that you've made progress! I couldn't have helped you with that, because I use Firefox by default :)

Strange, I've just tried it in Chromium with Lubuntu as the guest OS and it works nicely... I don't have Chrome installed, so I can't check the exact issue you have.

-

To save searching time for anyone else who also wants to run a Windows VM on ProxMox, I found that this guy has the most concise yet complete setup instructions:

https://www.youtube.com/watch?v=6c-6xBkD2J4

-

A couple more gotchas I ran across.

-

To get balloon memory working properly, you still need to go through the procedure that starts at around timestamp 14:30 on the youtube video that @monte posted above, where you use Windows PowerShell to activate the memory Balloon service.

-

Interacting directly with the Windows VM using just VNC is sluggish. Allegedly the way around this is to use Windows Remote Desktop, rather than VNC, to connect to the Windows VM. However, here's the gotcha if you opt to go that route: the Windows VM needs to be running Windows Pro. It turns out that Windows Home can't act as a Remote Desktop host, so it can't serve its desktop from the VM. :confounded: Gotcha!

-

-

Reporting back: I've been able to create a ZFS RAIDZ3 pool on my Proxmox server, which is a nice milestone. I also discovered just today that the Proxmox boot/root can fairly easily be installed onto a ZFS pool of its own during installation (pretty cool! :sunglasses:), which means the root itself can be configured to run at RAIDZ1/2/3, offering it the same degree of protection as the data entrusted to it. A reputable 128GB SSD costs just $20 these days, so I'll try combining four (4) of them as a RAIDZ1 boot disk for the root. I'd settle for 64GB SSDs or even less, but, practically speaking, 128GB seems to be the new minimum for what's being manufactured by the reputable brands. Used 32/64GB SSDs on eBay are on offer for nearly the same price as brand new 128GB SSDs, or even more. Go figure. It makes no sense unless you are beholden to a particular part or model number.

All this means that I'll have a zpool boot drive with effectively 3x120GB=360GB of usable space, so that will be plenty to house ISOs and VMs as well, which means that from the get-go and onward everything (the root, the ISOs, the VMs, as well as the files entrusted to them) will be ZFS RAIDZ protected. Pretty slick! :smiley:
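For anyone who wants to redo the 3x120GB arithmetic for a different disk count or RAIDZ level, here is a minimal Python sketch. The function name `raidz_usable_gb` is made up for illustration, and it assumes a single RAIDZ vdev of equal-sized disks. It ignores ZFS metadata, padding and reserved space, so the real figure will come out somewhat lower.

```python
def raidz_usable_gb(disk_count, disk_size_gb, parity):
    """Rough usable capacity of one RAIDZ vdev, in GB.

    Assumption: usable space is (disks - parity disks) * per-disk size.
    ZFS overhead (metadata, padding, reserved space) is NOT modelled.
    """
    if parity not in (1, 2, 3):
        raise ValueError("RAIDZ parity level must be 1, 2 or 3")
    if disk_count <= parity:
        raise ValueError("need more disks than parity devices")
    return (disk_count - parity) * disk_size_gb


# Four 128GB SSDs, counted as roughly 120GB each, in RAIDZ1:
boot_pool_gb = raidz_usable_gb(disk_count=4, disk_size_gb=120, parity=1)
print(boot_pool_gb)  # 360
```

The same function shows the trade-off when moving up a level: with the same four disks, RAIDZ2 would leave only two disks' worth of space.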

I suppose the next thing after that would be to build a RAIDZ NVMe cache to hopefully get a huge jump in speed. Most motherboards aren't blessed with four NVMe M.2 slots, but there do exist quad NVMe combo boards, so that four NVMe SSDs can be slotted into a single PCIe 3.0 slot.

-

It turns out that to get the full advantage of running four NVMe SSDs you'd need at minimum PCIe 3.0 x16 with motherboard support for bifurcation, and mine falls just short of that with PCIe 3.0 x8. Nonetheless, the max speed for PCIe 3.0 x8 is 7880MB/s, which is 14x the speed of a SATA SSD (550MB/s), and more than enough to saturate even 40GbE Ethernet. So, for a home network, that suggests running apps on the server itself in virtual machines and piping the video to thin clients through something like VNC. I figure that should give noticeably faster performance for everyone than the current situation, which is everyone running their own PC. How well that theory works in practice... I'm not sure.
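The bandwidth figures above can be sanity-checked with a small Python sketch. It assumes the standard PCIe 3.0 numbers (8 GT/s per lane with 128b/130b encoding) and counts 1 MB as 10^6 bytes; the helper name `pcie3_mb_per_s` is hypothetical. These are theoretical link rates only, so protocol overhead and real SSD behaviour will pull actual throughput lower.

```python
PCIE3_TRANSFERS_PER_S = 8e9      # PCIe 3.0 raw rate per lane (8 GT/s)
PCIE3_ENCODING = 128 / 130       # 128b/130b line encoding efficiency


def pcie3_mb_per_s(lanes):
    """Theoretical PCIe 3.0 bandwidth in MB/s (1 MB = 10**6 bytes)."""
    bits_per_s = PCIE3_TRANSFERS_PER_S * PCIE3_ENCODING * lanes
    return bits_per_s / 8 / 1e6


x8 = pcie3_mb_per_s(8)           # about 7877 MB/s, i.e. the ~7880 quoted
sata_ssd = 550                   # MB/s, the SATA SSD figure from the post
ethernet_40g = 40e9 / 8 / 1e6    # 40 Gbit/s expressed in MB/s (5000)

print(round(x8))                 # 7877
print(round(x8 / sata_ssd, 1))   # 14.3
print(x8 > ethernet_40g)         # True

# Four NVMe SSDs at x4 each want 16 lanes, twice what an x8 slot offers.
lanes_needed = 4 * 4
print(lanes_needed)              # 16
```

So the x8 slot caps four drives at roughly half of their combined link bandwidth, which is still well above what 40GbE could carry.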