Best PC platform for running Esxi/Docker at home?

-

@monte Thank you! That worked:

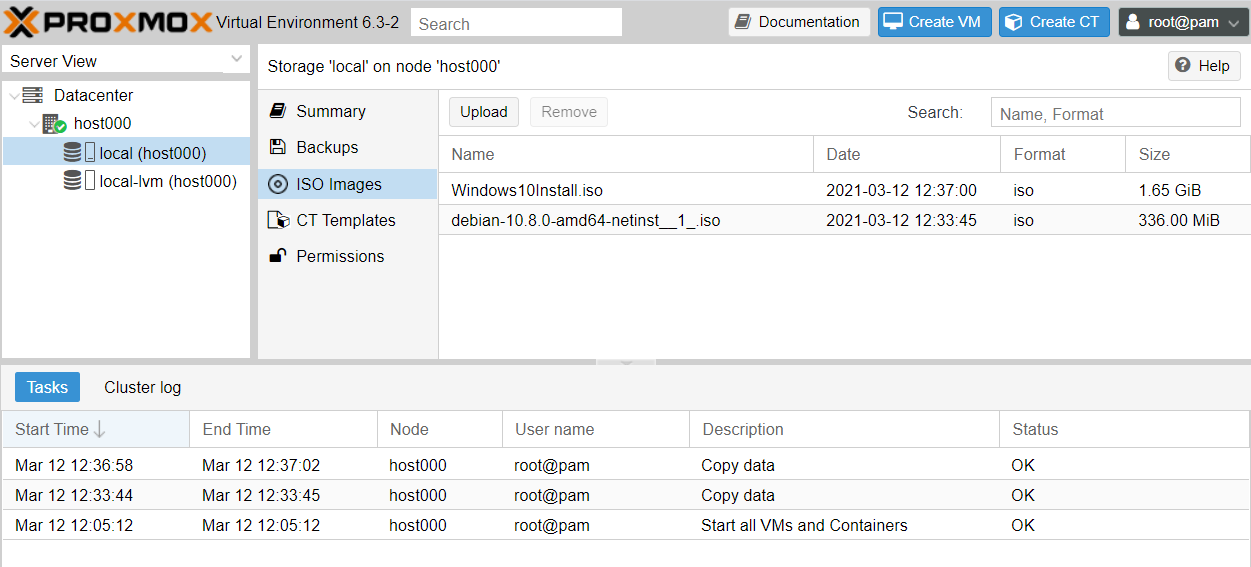

I uploaded both debian and windows iso's.

So, after starting the VM I gather I just click "monitor" to see the VM's virtual display? -

@NeverDie no, you use

Consolejust belowSummarybutton.

I must add, I never installed windows in proxmox, so I can't say how install process is managed with it, but I think someone on google knows :) -

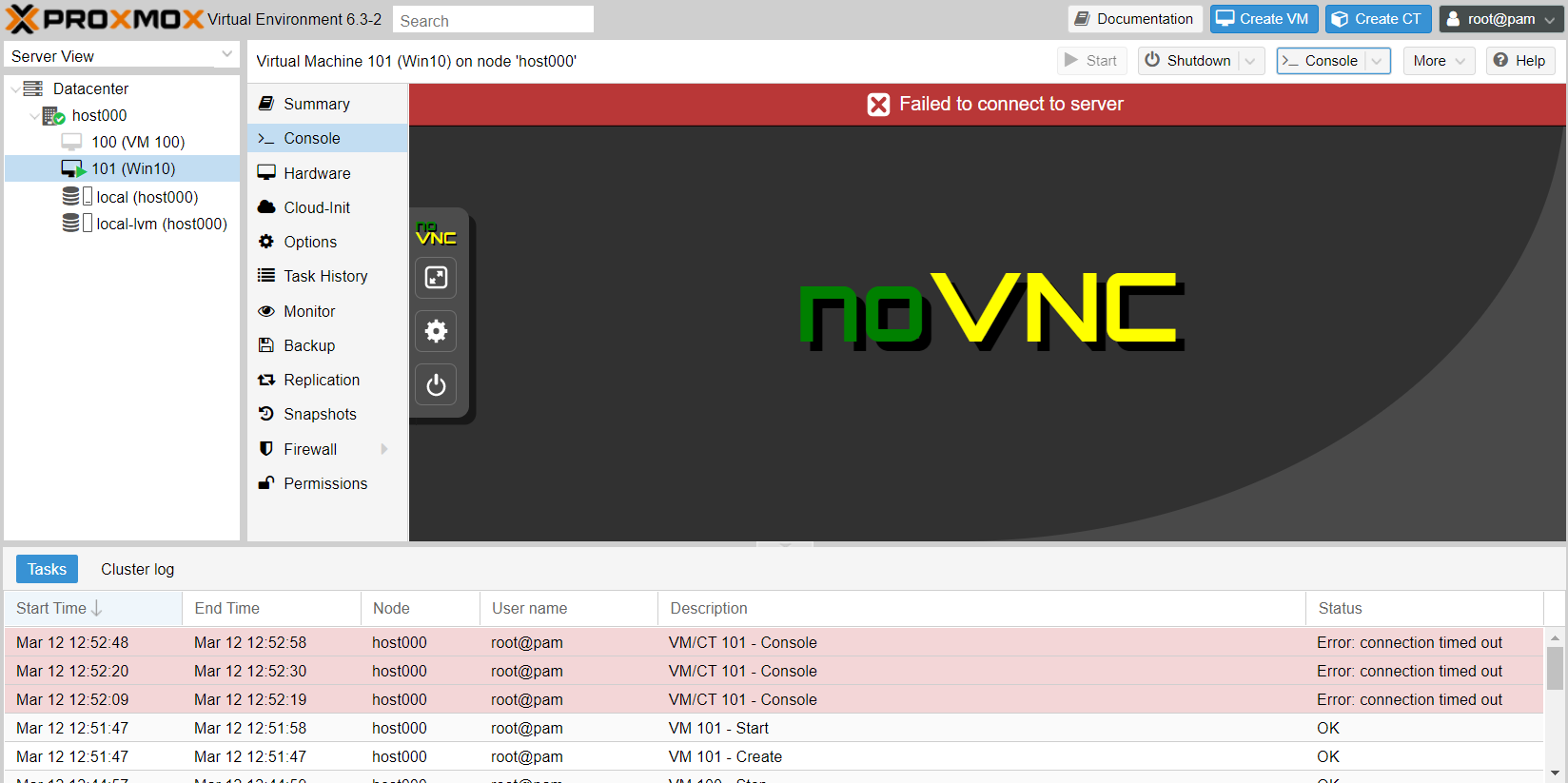

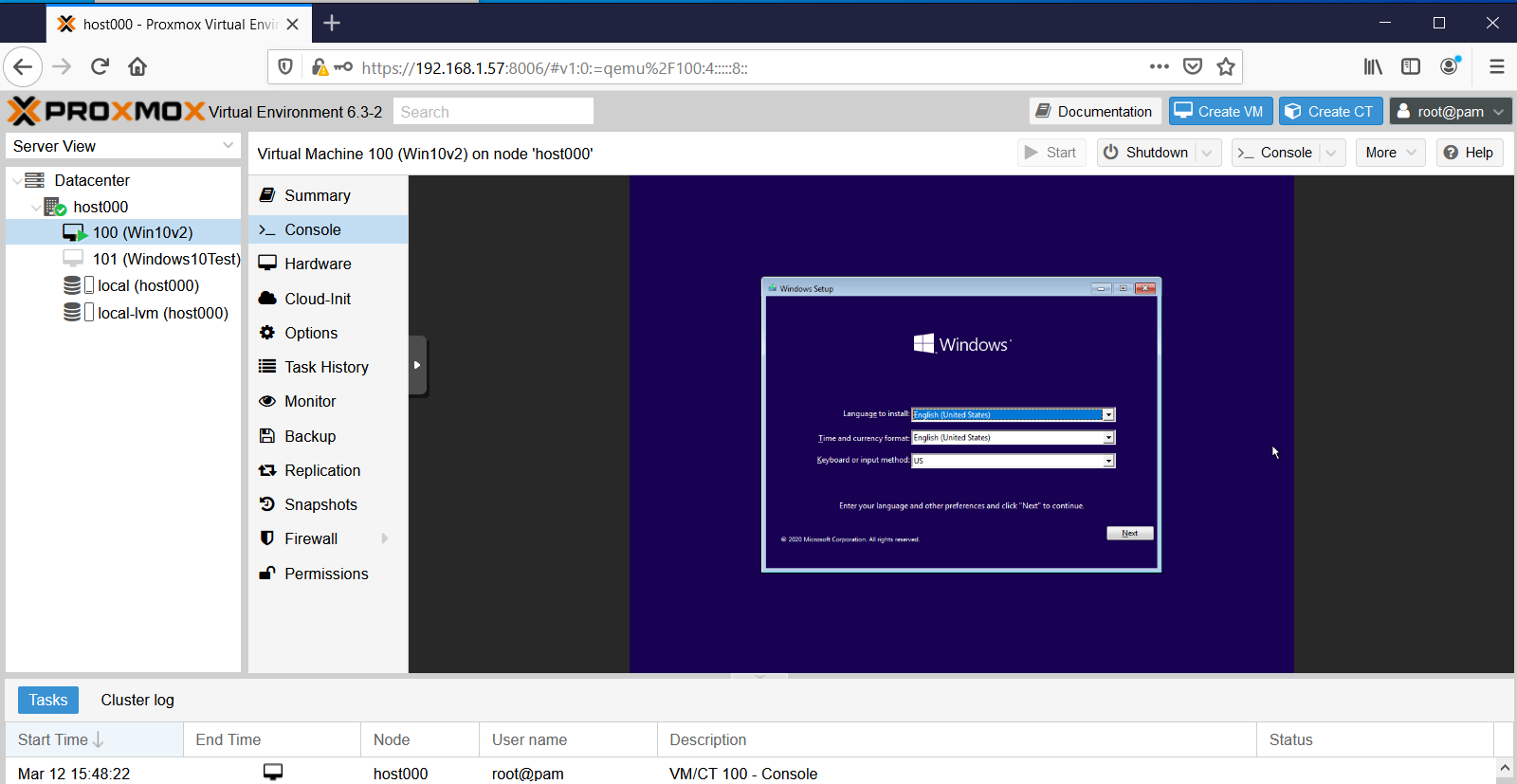

@monte Right. Just tried it, but got this:

Well, I won't burden you with more questions. Thanks for your help! -

Reporting back: After trying many things and much frustration :dizzy_face: , It turned out the reason for the noVnc console fail was, of all things, Google Chrome. :confounded:

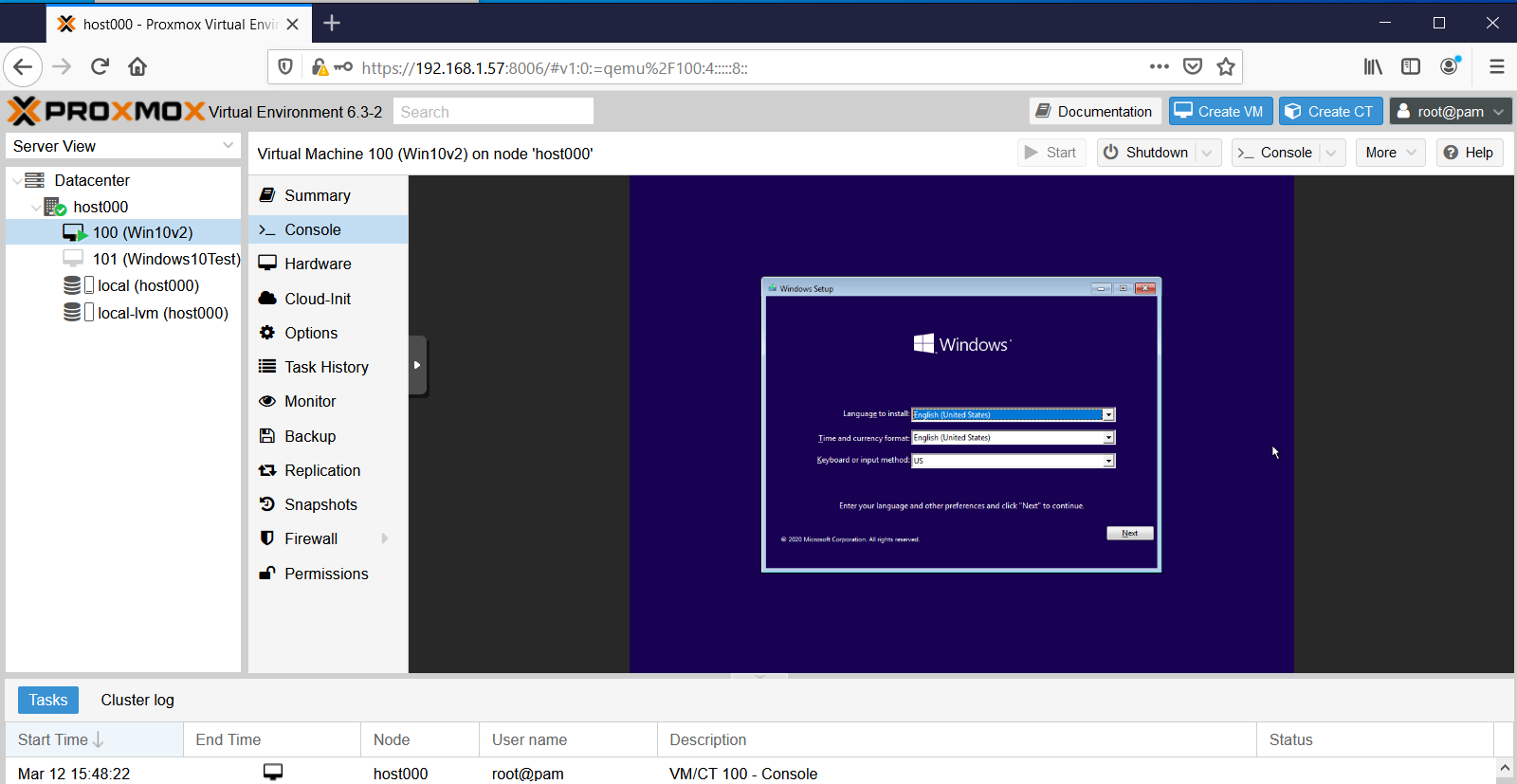

Who'd have guessed? Thank you Google. :angry: :angry: :angry: Too bad there's no emoji for sarcasm. :rolling_on_the_floor_laughing: Actually, I like Google Chrome a lot, so I hadn't even suspected it. Switching to Firefox fixed the issue:

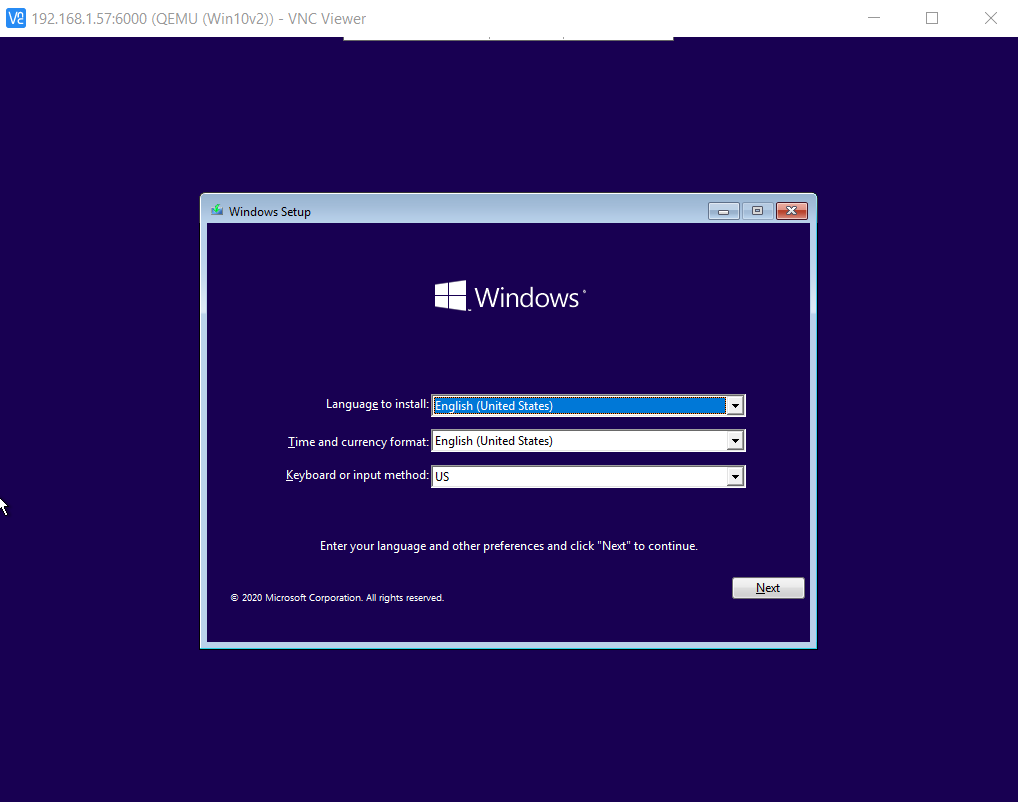

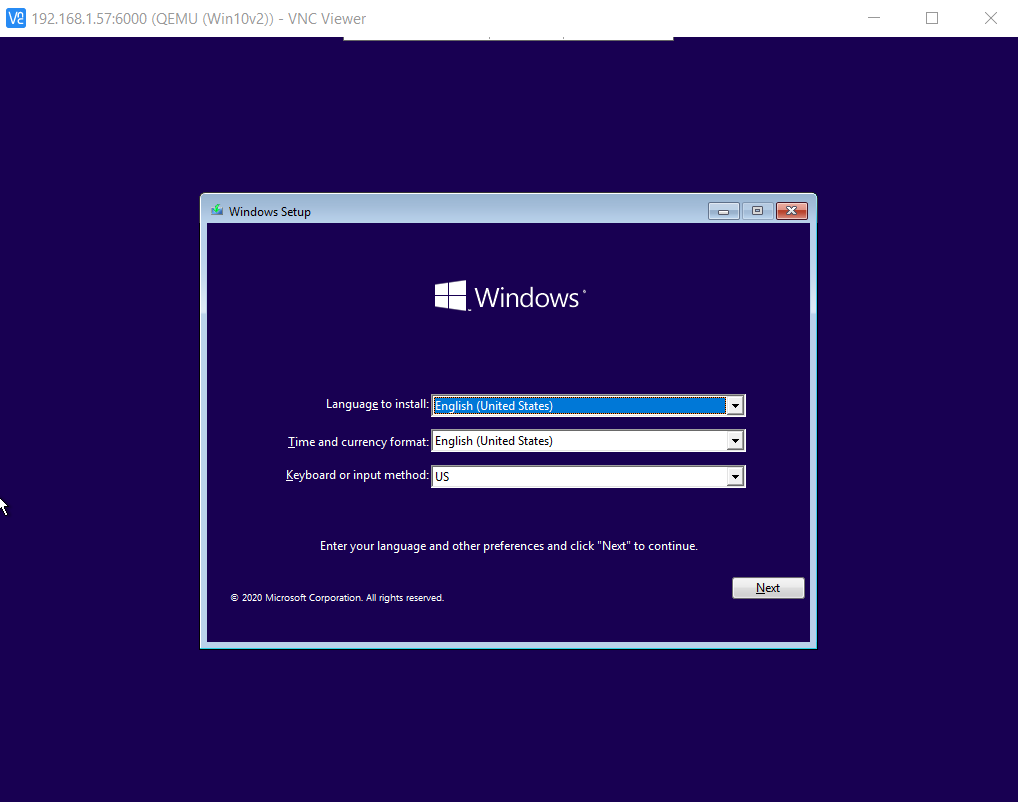

I'm also able to connect to it using VNC Viewer:

by typing:

change vnc 0.0.0.0:100

into the VM's monitor window, as described here: https://pve.proxmox.com/wiki/VNC_Client_Access#Standard_Console_AccessI even tried installing the noVNC google chrome extension, but still no joy. No idea as to why Chrome failed for this, but at least now the culprit is identified. :smile:

-

Reporting back: After trying many things and much frustration :dizzy_face: , It turned out the reason for the noVnc console fail was, of all things, Google Chrome. :confounded:

Who'd have guessed? Thank you Google. :angry: :angry: :angry: Too bad there's no emoji for sarcasm. :rolling_on_the_floor_laughing: Actually, I like Google Chrome a lot, so I hadn't even suspected it. Switching to Firefox fixed the issue:

I'm also able to connect to it using VNC Viewer:

by typing:

change vnc 0.0.0.0:100

into the VM's monitor window, as described here: https://pve.proxmox.com/wiki/VNC_Client_Access#Standard_Console_AccessI even tried installing the noVNC google chrome extension, but still no joy. No idea as to why Chrome failed for this, but at least now the culprit is identified. :smile:

@NeverDie I am happy, that you've made a progress! I couldn't help you with that, cause I am using Firefox by default :)

Strange, I've just tried in Chromium with Lubuntu as guest OS and it works nice...don't have Chrome installed, so can't check the exact issue you have. -

To save searching time for anyone else who also wants to run a Windows VM on ProxMox, I found that this guy has the most concise yet complete setup instructions:

https://www.youtube.com/watch?v=6c-6xBkD2J4 -

A couple more gotcha's I ran across.

-

To get balloon memory working properly, you still need to go through the procedure that starts at around timestamp 14:30 on the youtube video that @monte posted above, where you use Windows PowerShell to activate the memory Balloon service.

-

Interacting directly with the Window's VM using just VNC is sluggish. Allegedly the way around this is to use Windows Remote Desktop, rather than VNC, to connect to the Windows VM. However, here's the gotcha if you opt to go that route: the windows VM needs to be running Windows Pro. It turns out that Windows Home doesn't support serving Windows Desktop from the VM. :confounded: Gotcha!

-

-

Reporting back: I've been able to create a a ZFS RAIDZ3 pool on my proxmox server, which is a nice milestone. I also just today discovered that fairly easily during installation the ProxMox bootroot can also be installed onto a ZFS pool of its own--pretty cool!:sunglasses: --which means the root itself can be configured to run at RAIDZ1/2/3, offering it the same degree of protection as the data entrusted to it . A reputable 128GB SSD costs just $20 these days, so I'll try combining four (4) of them as a RAIDZ1 boot disk for the root. I'd settle for 64GB SSD's or even less, but, practicially speaking, 128GB seems to be the new minimum for what's being manufactured by the reputable brands. Used 32/64GB SSD's on ebay are on offer for nearly the same price, or even more, than brand new 128GB SSDs. Go figure. It makes no sense if you aren't beholden to a particular part or model number.

All this means that I'll have a zpool boot drive with effectively 3x120GB=360GB of useable space, so that will be plenty to house ISO's and VM's as well, which means that from the get-go and onward everything (the root, the ISO's, the VM's, as well as the files entrusted to them) will be ZFS ZRAID protected. Pretty slick! :smiley:

I suppose the next thing after that would be to build a ZRAID nvme cache to hopefully get a huge jump in speed. Most motherboards aren't blessed with 4 nvme M.2 slots, but there do exist quad nvme combo boards so that four nvme SSD's that can be slotted into a single PCIE-3 slot.

-

It turns out that to get full advantage of running four nvme SSD drives you'd need at minimum pci-e 3.0 x16 with motherboard support for bifurcation, and mine is just short of that with pci-e 3.0 x8. Nonetheless, the max speed for pci-e 3.0 x8 is 7880MB/s, which is 14x the speed of a SATA SSD (550MB/s), and more than enough to saturate even 40GE ethernet. So, for a home network that suggests running apps on the server itself in virtual machines and piping the video to thin clients through something like VNC. I figure that should be noticeably faster performance for everyone than the current situation, which is everyone running their own PC. How well that theory works in practice.... I'm not sure.

-

Final report: I tried it out, but having to run a Windows thin-client to connect via Windows Remote Desktop to a Windows VM running on a server was a buzzkill. The hardest case I've tried is watching high definition youtube videos. The server can handle the workload even without a graphics card and still not max out the CPU cores. It works, but the visual fidelity goes down--I guess because it's first displayed on a VM screen inside the VM on the server and then re-encoded and pumped to the thin-client? Not sure if that's what's actually happening but if so maybe there's a way to around that that doesn't inolve a re-encoding. Ideally, being able to losslessly connect to any VM from, say, a chromebook would have been nice.

Lastly, I have been able to move VM's from one physical machine to another and get them to run. Maybe I'm doing it wrong, but for my first attempt I did it by first "backing up" the VM to the server using ProxMox Backup Server, and then "restoring" the VM to the target machine (in my test case, the same physical machine as the one running ProxMox Backup Server). Doing it this way I encountered two bottlenecks: #1. moving the VM over 1 gigabit ethernet takes a while. However, upgrading to 10-gigabit ethernet or faster might address that. #2 Even longer, than #1, the "restoring" process takes quite a while, even though in my test case it didn't involve sending anything over ethernet. This was a surprise. I'm not sure why the "restoring" takes so long, but it takes much longer than doing the original backup. Unless the "restoring" is doing special magic to adjust the VM to run on the new target machine hardware, then in theory doing just a straight copy of the VM file without any "restoring" should be much, much faster. I'll try that next.

-

Final report: I tried it out, but having to run a Windows thin-client to connect via Windows Remote Desktop to a Windows VM running on a server was a buzzkill. The hardest case I've tried is watching high definition youtube videos. The server can handle the workload even without a graphics card and still not max out the CPU cores. It works, but the visual fidelity goes down--I guess because it's first displayed on a VM screen inside the VM on the server and then re-encoded and pumped to the thin-client? Not sure if that's what's actually happening but if so maybe there's a way to around that that doesn't inolve a re-encoding. Ideally, being able to losslessly connect to any VM from, say, a chromebook would have been nice.

Lastly, I have been able to move VM's from one physical machine to another and get them to run. Maybe I'm doing it wrong, but for my first attempt I did it by first "backing up" the VM to the server using ProxMox Backup Server, and then "restoring" the VM to the target machine (in my test case, the same physical machine as the one running ProxMox Backup Server). Doing it this way I encountered two bottlenecks: #1. moving the VM over 1 gigabit ethernet takes a while. However, upgrading to 10-gigabit ethernet or faster might address that. #2 Even longer, than #1, the "restoring" process takes quite a while, even though in my test case it didn't involve sending anything over ethernet. This was a surprise. I'm not sure why the "restoring" takes so long, but it takes much longer than doing the original backup. Unless the "restoring" is doing special magic to adjust the VM to run on the new target machine hardware, then in theory doing just a straight copy of the VM file without any "restoring" should be much, much faster. I'll try that next.

-

Reporting back: I'd like to find a BTRFS equivalent to ProxMox, because although I like the reliability that ZFS can, in theory, offer, it's simply not a native linux file system. As a result, if I want to backup VM's to a zpool dataset on, say, a truenas server using ProxMox Backup Server, I have to first reserve a giant fixed size chunk of storage on a Truenas ZFS dataset by creating a ZVOL on it, and then I have to overlay that ZVOL with a linux file system like ext4 so that ProxMox Backup Server can read/write the backups on the ZVOL using iSCSI. However, I've got to believe that doing it this way largely undermines the data integrity that ZFS is offering, and it also means I have a big block of reserved ZVOL storage to handle the worst-case that will be largely underutilized compared to if ProxMox could read/write ZFS datasets natively.

So, instead of doing that, which I did get to "work", I've decided to set up a regular linux ext4 logical volume on an NVME accessible to ProxMox for storing VM's and creating VM backups, because ProxMox has no trouble reading/writing to an ext4 file system. I have ProxMox write its backups to that linux logical volume, and then I copy those relatively small files (well, small compared to the giant ZVOL I would otherwise have to reserve to contain them) over to a ZFS dataset on a TrueNas server (which is running inside a VM on ProxMox).

I don't yet know for sure, but I presume (?) BTRFS wouldn't require these kinds of contortions, since BTRFS should be native to the Linux operating system, just like ext4 is.

Footnote: The above isn't entirely accurate, because I can and do boot proxmox itself on a raidZ1, and ProxMox can happily write and backup VM's to that ZFS drive. However, the gotcha is that, for some reason, when writing directly to a ZFS file system, ProxMox insists on writing both the VMs and VM backups as raw block files instead of a much more compact qcow2. Ironically, if writing to an ext4 file system, Proxmox can write both VM's and VM backups in the qcow2 file format. So, a VM of, say, PopOS might occupy 3GiB as a qcow2 file, but ProxMox would write it as a 32GiB raw file, if that's the size I told ProxMox to allocate when I configured the VM. Or 64Gib if I wanted to cover a possible larger future PopOS configuration. That kind of size discrepancy adds up in a hurry as you create, backup, and manage more and more VM's. I thought automatic file compression might make it a moot point, but it doesn't seem to work as well on raw files as I would have guessed, maybe due to file leftovers within the raw block as files are moved around or shrunk or "deleted" within the disk image. A file system wouldn't see those remnants, but they'd still be in a disk image, and automatic compression on a raw disk image has no way of knowing what's purely leftover junk file remnants vs essential file data that's still in use.

Or perhaps the difference is at least partly because a Linux LVM specifically allows for thin provisioning?

Thin Provisioning A number of storages, and the Qemu image format qcow2, support thin provisioning. With thin provisioning activated, only the blocks that the guest system actually use will be written to the storage. Say for instance you create a VM with a 32GB hard disk, and after installing the guest system OS, the root file system of the VM contains 3 GB of data. In that case only 3GB are written to the storage, even if the guest VM sees a 32GB hard drive. In this way thin provisioning allows you to create disk images which are larger than the currently available storage blocks. You can create large disk images for your VMs, and when the need arises, add more disks to your storage without resizing the VMs' file systems. https://pve.proxmox.com/wiki/StorageOr maybe it's just the arbitrary current state of ProxMox development, and this difference will get fixed in the future? I have no idea.

I'm not really expecting anyone here knows the answer, so this is mostly just a follow-up as to how things went. Although not ideal, I can make do with the workaround I've outlined in this post.

-

Reporting back: I'd like to find a BTRFS equivalent to ProxMox, because although I like the reliability that ZFS can, in theory, offer, it's simply not a native linux file system. As a result, if I want to backup VM's to a zpool dataset on, say, a truenas server using ProxMox Backup Server, I have to first reserve a giant fixed size chunk of storage on a Truenas ZFS dataset by creating a ZVOL on it, and then I have to overlay that ZVOL with a linux file system like ext4 so that ProxMox Backup Server can read/write the backups on the ZVOL using iSCSI. However, I've got to believe that doing it this way largely undermines the data integrity that ZFS is offering, and it also means I have a big block of reserved ZVOL storage to handle the worst-case that will be largely underutilized compared to if ProxMox could read/write ZFS datasets natively.

So, instead of doing that, which I did get to "work", I've decided to set up a regular linux ext4 logical volume on an NVME accessible to ProxMox for storing VM's and creating VM backups, because ProxMox has no trouble reading/writing to an ext4 file system. I have ProxMox write its backups to that linux logical volume, and then I copy those relatively small files (well, small compared to the giant ZVOL I would otherwise have to reserve to contain them) over to a ZFS dataset on a TrueNas server (which is running inside a VM on ProxMox).

I don't yet know for sure, but I presume (?) BTRFS wouldn't require these kinds of contortions, since BTRFS should be native to the Linux operating system, just like ext4 is.

Footnote: The above isn't entirely accurate, because I can and do boot proxmox itself on a raidZ1, and ProxMox can happily write and backup VM's to that ZFS drive. However, the gotcha is that, for some reason, when writing directly to a ZFS file system, ProxMox insists on writing both the VMs and VM backups as raw block files instead of a much more compact qcow2. Ironically, if writing to an ext4 file system, Proxmox can write both VM's and VM backups in the qcow2 file format. So, a VM of, say, PopOS might occupy 3GiB as a qcow2 file, but ProxMox would write it as a 32GiB raw file, if that's the size I told ProxMox to allocate when I configured the VM. Or 64Gib if I wanted to cover a possible larger future PopOS configuration. That kind of size discrepancy adds up in a hurry as you create, backup, and manage more and more VM's. I thought automatic file compression might make it a moot point, but it doesn't seem to work as well on raw files as I would have guessed, maybe due to file leftovers within the raw block as files are moved around or shrunk or "deleted" within the disk image. A file system wouldn't see those remnants, but they'd still be in a disk image, and automatic compression on a raw disk image has no way of knowing what's purely leftover junk file remnants vs essential file data that's still in use.

Or perhaps the difference is at least partly because a Linux LVM specifically allows for thin provisioning?

Thin Provisioning A number of storages, and the Qemu image format qcow2, support thin provisioning. With thin provisioning activated, only the blocks that the guest system actually use will be written to the storage. Say for instance you create a VM with a 32GB hard disk, and after installing the guest system OS, the root file system of the VM contains 3 GB of data. In that case only 3GB are written to the storage, even if the guest VM sees a 32GB hard drive. In this way thin provisioning allows you to create disk images which are larger than the currently available storage blocks. You can create large disk images for your VMs, and when the need arises, add more disks to your storage without resizing the VMs' file systems. https://pve.proxmox.com/wiki/StorageOr maybe it's just the arbitrary current state of ProxMox development, and this difference will get fixed in the future? I have no idea.

I'm not really expecting anyone here knows the answer, so this is mostly just a follow-up as to how things went. Although not ideal, I can make do with the workaround I've outlined in this post.

@NeverDie yep, ZFS is a strange beast for me. I tried to avoid it, because I don't have no hardware nor application to utilize it's potential. But I was also afraid of LVM, and then one time I had to recover proxmox setup that I've messed up when was trying to migrate it to a bigger drive without reinstall. At one moment I thought that I've broken the whole volume group, but somehow I've got it back after few rebuilds from semi-broken backup image :)

It reminds me the name of this great movie: :)

-

Reporting Back: Good news! Rather than use iSCSI, I found that I could create a SAMBA mount point and then use that to connect with a ZFS dataset on TrueNAS, running on a virtual machine within ProxMox. This allows ProxMox to backup VM's to that as a regular ZFS dataset without having to pre-allocate storage, as was the case with a ZVOL. I've tested this out, and it works. So, it's all good now. I'm once again happy with Proxmox. :slightly_smiling_face: :smile: :smiley:

BTW, running a SAMBA share has the added advantage of being easily accessible from Windows File Explorer, making it an attractive bonus. Once set up, I'm finding that SAMBA (aka SMB) on TrueNAS for a mix of Linux and Windows machines on one network provides easy file sharing.

An unrelated but good find: BTRFS on regular linux works great, especially when combined with TimeShift for instant snapshot and rollbacks. A rollback does require a reboot to take effect, but it's nonetheless better than no rollback ability at all.. BTRFS is one of the guided install options on both Ubuntu and Linux Mint Cinnamon. For Microsoft Windows users, I think you'll find that the GUI on Linux Mint Cinnamon is a good match for your Windows intuitions. I'll probably settle on Mint Cinnamon for its stability and intuitive ease of use. The pre-configuring that went into Mint makes it much easier for new Linux users to pick up and immediately start using, as compared to a plain vanilla Debian install, which was my previous go-to because of Debian's high stability.

Of greater relevance: "Viritual Machine Manager" (VMM) is a QEMU-KVM hypervisor GUI that runs on Mint which looks strikingly similar to ProxMox during the process of configuring a new VM. I was looking into VMM as a BTRFS hypervisor alternative to ZFS ProxMox, and though not exactly apples-to-apples, on first-look VMM does look quite capable. Also, in some ways the VMM GUI looks both more detailed and more polished than ProxMox's GUI. I also looked very briefly (maybe too briefly) at Cockpit and at Gnome Boxes, but my first-impression was both looked like work-in-progress. Are there any other hypervisors, not already mentioned on this thread, that runs on top of BTRFS and is worth considering?

-

Since prices for both GPU's and Ryzen 9 5950x CPU's have gone crazy, I ended up ordering a Ryzen 5700G, which is 65w nominal. CPU performance is a little less than the 5800x (at 105w), but the 5700G is priced about the same and has integrated graphics. I'd like to use the 5700G as the host for virtual machines, and I'm wondering now whether the integrated graphics gets shared among multiple virtual machines or whether at most one VM can make use of it. Offhand, I'm not sure how I would even test for that, except maybe with some kind of graphics benchmark.

-

I've been looking at building a new server myself.. Currently I have an old hp desktop machine with an I5 / 16Gb of ram, running as server.. Works fine (and it was cheap). But I want to play more with docker / kubernetes, and perhaps do some more machinelearning on zoneminder images, so could use a cpu with a bit more oompf.. Perhaps an nvidia gfx for the ML part..

Only problem is that I'm not ready to spend 1000$ or more on hardware, so I'll probably just wait for the prices to drop again..

(Currently I just run ubuntu server, and then docker on top of that.. No VMs or the like..)

-

I've been looking at building a new server myself.. Currently I have an old hp desktop machine with an I5 / 16Gb of ram, running as server.. Works fine (and it was cheap). But I want to play more with docker / kubernetes, and perhaps do some more machinelearning on zoneminder images, so could use a cpu with a bit more oompf.. Perhaps an nvidia gfx for the ML part..

Only problem is that I'm not ready to spend 1000$ or more on hardware, so I'll probably just wait for the prices to drop again..

(Currently I just run ubuntu server, and then docker on top of that.. No VMs or the like..)

@tbowmo I have Nvidia GT1030 running yolo object detection on camera feeds with 7fps on full model and 20-30fps on yolo-tiny. Considering you don't need to process every frame your cameras produce and 1 or even 0.5 fps per camera is practical enough, you can get this thing going on a budget.

-

I've been looking at building a new server myself.. Currently I have an old hp desktop machine with an I5 / 16Gb of ram, running as server.. Works fine (and it was cheap). But I want to play more with docker / kubernetes, and perhaps do some more machinelearning on zoneminder images, so could use a cpu with a bit more oompf.. Perhaps an nvidia gfx for the ML part..

Only problem is that I'm not ready to spend 1000$ or more on hardware, so I'll probably just wait for the prices to drop again..

(Currently I just run ubuntu server, and then docker on top of that.. No VMs or the like..)

@tbowmo For the machine learning part I bet there are cloud based RTX cards you could use, or perhaps AI cloud servers. Last I checked, which was a while ago, Google was offering tensorflow in the cloud for free. I'm using nvidia Now, which is meant for gaming, but as a data point it offers access to a dedicated RTX3080 on a VM for a mere $10 per month. That's a trivially low price compared to buying physical RTX3080 hardware at current market rates.

-

@tbowmo For the machine learning part I bet there are cloud based RTX cards you could use, or perhaps AI cloud servers. Last I checked, which was a while ago, Google was offering tensorflow in the cloud for free. I'm using nvidia Now, which is meant for gaming, but as a data point it offers access to a dedicated RTX3080 on a VM for a mere $10 per month. That's a trivially low price compared to buying physical RTX3080 hardware at current market rates.

@NeverDie i prefer to keep video footage local. Not saying that I need an rtx3080/3090. They're too pricey. Looking more towards 1050ti or the like. Should be enough, if I'm not going for coral tpu. Waiting for a friend to get hold on some tpus, and give his verdict.

-

@tbowmo I have Nvidia GT1030 running yolo object detection on camera feeds with 7fps on full model and 20-30fps on yolo-tiny. Considering you don't need to process every frame your cameras produce and 1 or even 0.5 fps per camera is practical enough, you can get this thing going on a budget.